Call me sexist, but...: Revisiting Sexism Detection Using Psychological Scales and Adversarial Samples.

Abstract

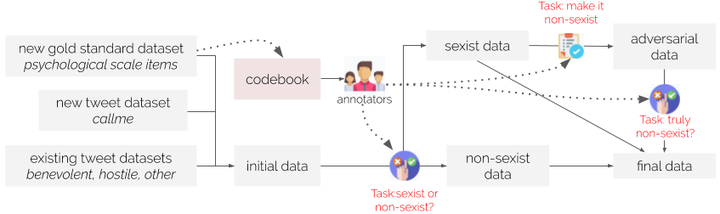

Research has focused on automated methods to effectively detect sexism online. Although overt sexism seems easy to spot, its subtle forms and manifold expressions are not. In this paper, we outline the different dimensions of sexism by grounding them in their implementation in psychological scales. From the scales, we derive a codebook for sexism in social media, which we use to annotate existing and novel datasets, surfacing their limitations in breadth and validity with respect to the construct of sexism. Next, we leverage the annotated datasets to generate adversarial examples, and test the reliability of sexism detection methods. Results indicate that current machine learning models pick up on a very narrow set of linguistic markers of sexism and do not generalize well to out-of-domain examples. Yet, including diverse data and adversarial examples at training time results in models that generalize better and that are more robust to artifacts of data collection. By providing a scale-based codebook and insights regarding the shortcomings of the state-of-the-art, we hope to contribute to the development of better and broader models for sexism detection, including reflections on theory-driven approaches to data collection.